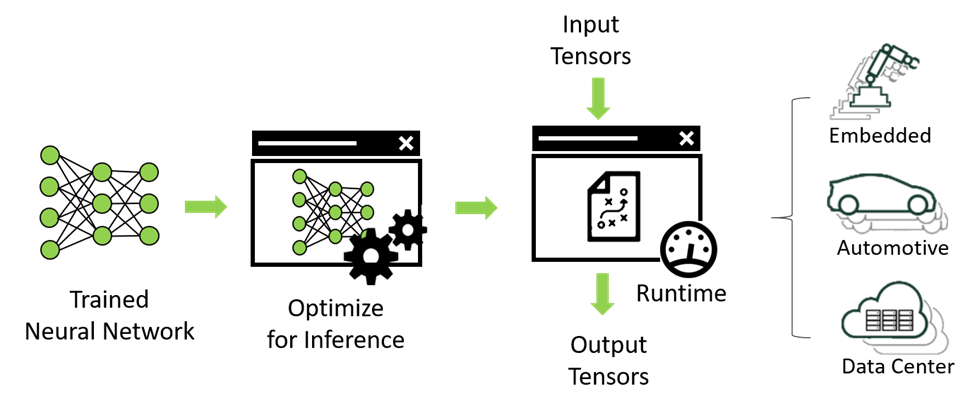

The final stage of the deep-learning development process is deploying your model on a specific target platform. In real-world applications, the deployed model is often required to run inference in real time or faster, and the target platform may be highly resource-limited, for example an embedded system in an automotive or robotics platform. In general, however, a freshly trained model does not meet these performance requirements in production. In the worst case, the model may not run on the target platform at all because of the target system's memory limitations. Proper optimization of the trained model is therefore essential for deployment.

How Can You Optimize Your Model for Inference?

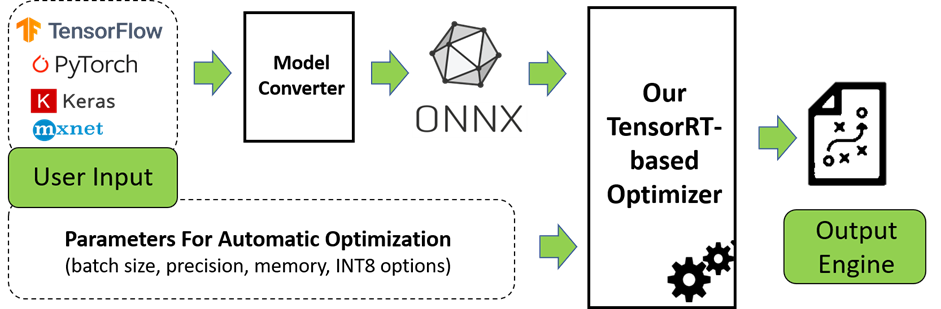

We are developing a cloud-based inference optimization service built on NVIDIA's TensorRT. The service gives deep-learning developers an easy, efficient way to optimize models trained in popular deep-learning frameworks and deploy them on a specific target platform with an NVIDIA GPU. With our service, developers can keep focusing on model training as before, which can significantly improve the efficiency of the deep-learning development process.

Main Features of Our Inference Optimization Service

The core of our inference optimization service consists of two components: a TensorRT-based inference library and a workflow engine running on a Kubernetes cluster. Its main features are as follows:

- Automatic inference optimization with intuitive GUI

- Easy INT8 optimization

- Support for models from popular deep-learning frameworks (TensorFlow, PyTorch, MXNet)

- Support for various NVIDIA GPUs as target platforms

- Generation of an optimal inference engine with high performance and low accuracy degradation

- On-demand technical service for non-standard or custom models

Our optimization service will be an excellent solution for you if:

- you would like someone else, or a tool, to handle deploying your DL model so that you can focus on model training

- INT8 optimization is essential for your project, but you cannot afford significant accuracy loss from it

- you want to build and test an optimized engine for a target platform that you don't have

- trtexec cannot build an inference engine for your custom model

What is TensorRT?

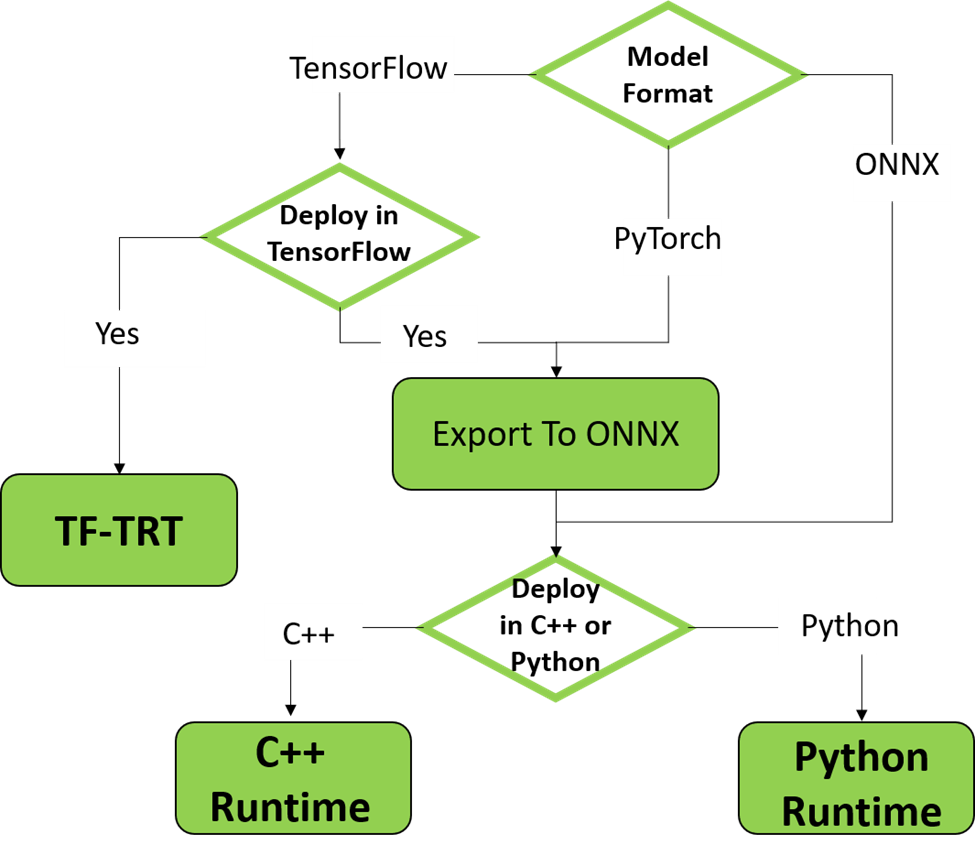

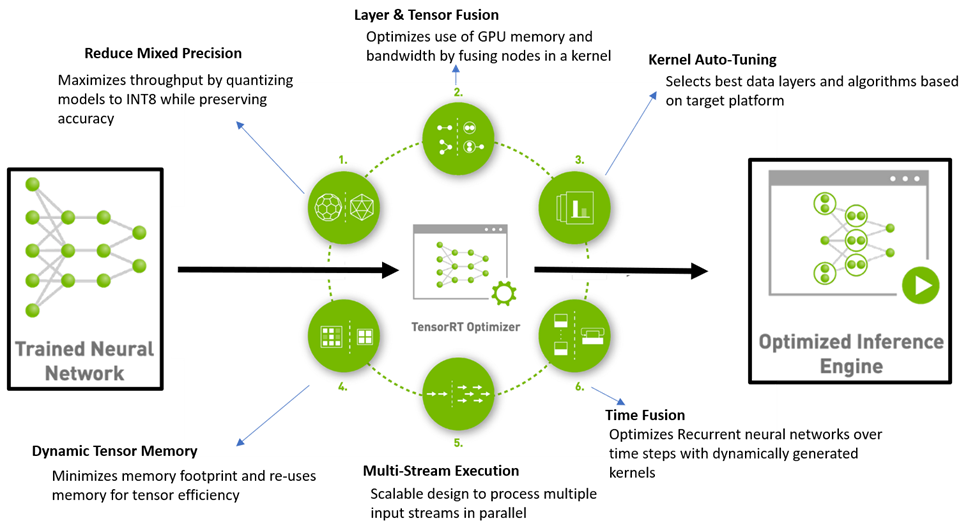

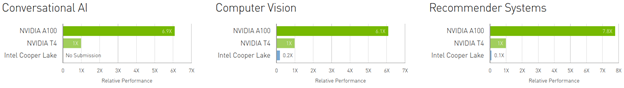

Our inference optimization library is built on TensorRT, NVIDIA's SDK for high-performance deep-learning inference. Deep-learning developers can optimize their models for inference on a specific target platform using TensorRT's C++ or Python API. If you train models with TensorFlow, you can also run high-performance inference through TF-TRT, the TensorRT integration in TensorFlow. TensorRT is the de facto standard library for inference optimization, as the recent MLPerf Inference v1.0 results illustrate.
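To make "using TensorRT's Python API" concrete, here is a minimal sketch of building an engine from a model that has already been exported to ONNX. It assumes the TensorRT 8.x-style Python API (newer releases deprecate some of these calls, such as the explicit-batch flag), and the file names in the usage line are hypothetical. It is an illustration of the general workflow, not the implementation used by our service.

```python
def build_engine(onnx_path: str, engine_path: str, use_fp16: bool = True) -> None:
    """Parse an ONNX model and write a serialized TensorRT engine (TensorRT 8.x-style API)."""
    # Imported inside the function so the sketch can be loaded without TensorRT installed.
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    # The ONNX parser requires an explicit-batch network definition in TensorRT 8.x.
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            errors = "\n".join(str(parser.get_error(i)) for i in range(parser.num_errors))
            raise RuntimeError(f"ONNX parsing failed:\n{errors}")

    config = builder.create_builder_config()
    if use_fp16:
        # Allow TensorRT to pick FP16 kernels where its builder finds them beneficial.
        config.set_flag(trt.BuilderFlag.FP16)

    # Layer fusion and kernel selection happen here, for the GPU the build runs on.
    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed")
    with open(engine_path, "wb") as f:
        f.write(serialized)


# Hypothetical usage: build_engine("model.onnx", "model.plan")
```

This does roughly what `trtexec --onnx=model.onnx --saveEngine=model.plan --fp16` does from the command line. A serialized engine is generally tied to the GPU model and TensorRT version it was built with, so it is normally built on, or for, the actual target platform.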

Some Use Cases of TensorRT

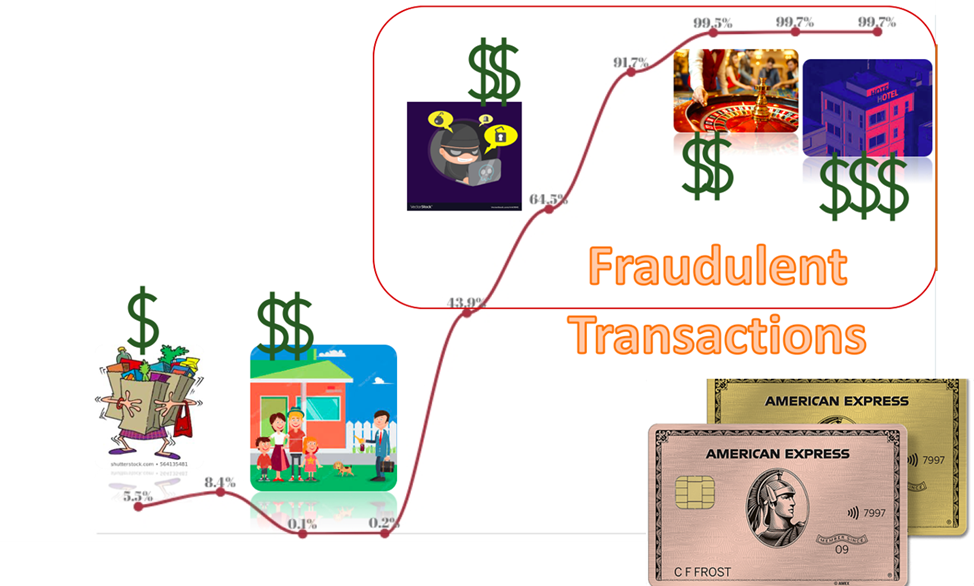

American Express has deployed deep-learning models optimized with NVIDIA TensorRT and served on NVIDIA Triton Inference Server to detect fraud. “Our fraud algorithms monitor in real time every American Express transaction around the world for more than $1.2 trillion spent annually, and we generate fraud decisions in mere milliseconds,” said Manish Gupta, vice president of Machine Learning and Data Science Research at American Express.

(See https://blogs.nvidia.com/blog/2020/10/05/american-express-nvidia-ai/)

“By introducing an NVIDIA GPU to the HPE Edgeline EL4000 chassis and optimizing network performance with NVIDIA TensorRT, inference latency decreased from 4165 to three milliseconds. This translates to almost 1400 times better performance,” said Kemal Levi, founder and CEO of Relimetrics.
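The quoted factor follows directly from the two latencies:

$$
\text{speedup} = \frac{4165\ \text{ms}}{3\ \text{ms}} \approx 1388
$$

which is the "almost 1400 times" in the quote. Assuming one inference at a time, a 3 ms latency also corresponds to roughly $1000/3 \approx 333$ inferences per second, compared with fewer than one per second ($1000/4165 \approx 0.24$) before optimization.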

(See https://media.utc.watch/company_media/HPE_case_study_Relimetrics.pdf)

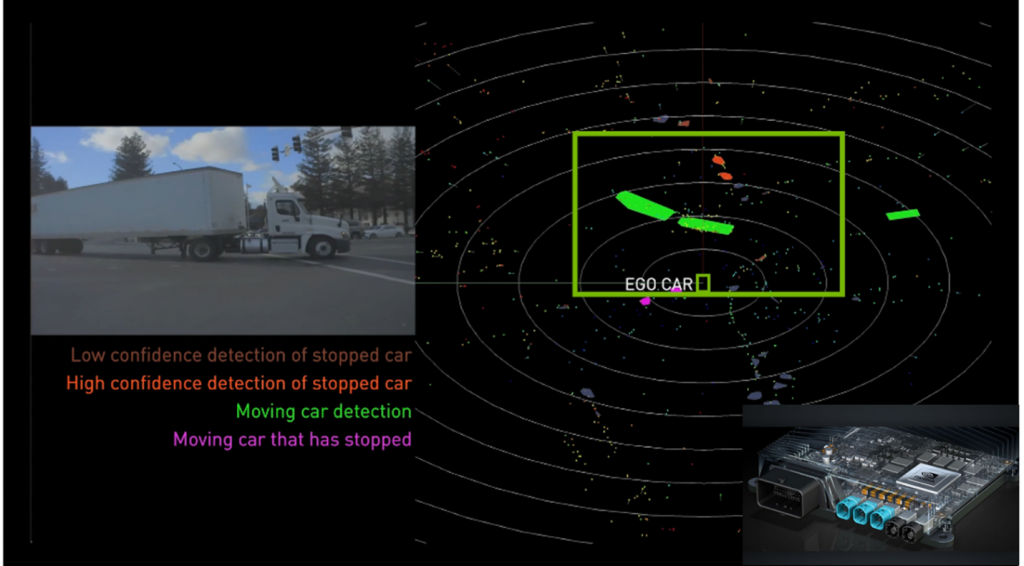

From NVIDIA's announcement of its extended partnership with Volvo Cars: “Building safe self-driving cars is one of the most complex computing challenges today. Advanced sensors surrounding the car generate enormous amounts of data that must be processed in a fraction of a second. That’s why NVIDIA developed Orin, the industry’s most advanced, functionally safe and secure, software-defined autonomous vehicle computing platform.”

(See https://blogs.nvidia.com/blog/2021/04/12/volvo-cars-extends-partnership-nvidia-drive-orin)

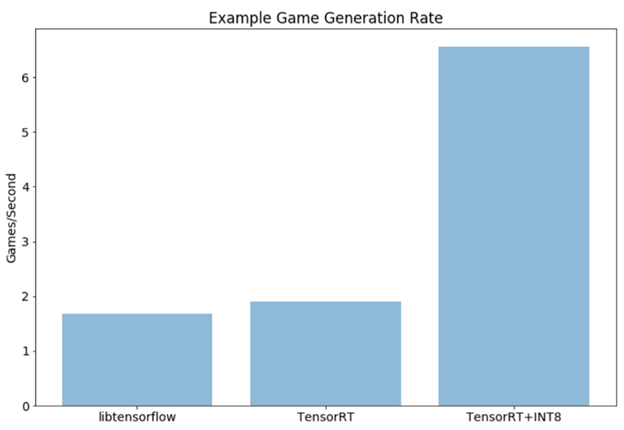

In the reinforcement-learning training of AlphaZero, the frequent inferences required during self-play games create a bottleneck. It has been reported that TensorRT-based INT8 optimization makes this inference about four times faster.
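INT8 optimization maps 32-bit floating-point values to 8-bit integers, which reduces memory traffic and allows faster integer arithmetic on GPUs that support it, but introduces rounding and clipping error. The sketch below shows a simplified symmetric per-tensor scheme, in which a calibrated range `amax` is mapped onto the integer codes [-127, 127]. It is a hedged illustration of the general idea, not TensorRT's internal implementation, and the activation values and `amax` are made up.

```python
def quantize_int8(values, amax):
    """Symmetric per-tensor INT8 quantization with scale = amax / 127."""
    scale = amax / 127.0
    # Round to the nearest integer code, then clamp to the symmetric range [-127, 127].
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [c * scale for c in codes]


# Hypothetical activations; amax would normally come from representative calibration data.
activations = [0.02, -1.3, 0.7, 2.54, -2.54, 3.9]
codes, scale = quantize_int8(activations, amax=2.54)
restored = dequantize_int8(codes, scale)

for original, code, approx in zip(activations, codes, restored):
    print(f"{original:6.2f} -> {code:4d} -> {approx:6.3f} (error {abs(original - approx):.4f})")
```

Values inside [-amax, amax] are reconstructed with an error of at most half a quantization step (scale / 2, about 0.01 here), while 3.9 lies outside the range and is clipped to about 2.54. Choosing `amax` from representative calibration data is how these two error sources are balanced, which is why calibration matters for keeping INT8 accuracy loss low.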

(See https://medium.com/oracledevs/lessons-from-alpha-zero-part-5-performance-optimization-664b38dc509e)

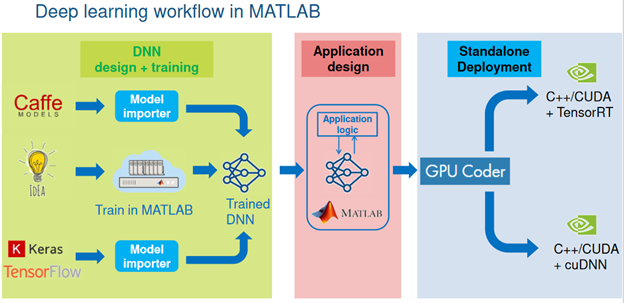

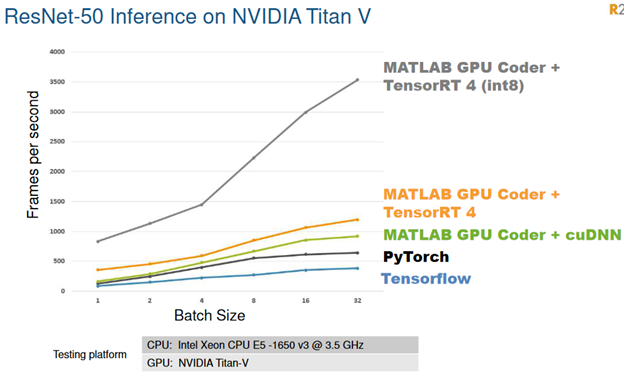

In MATLAB, TensorRT has been integrated into GPU Coder as part of the deep-learning workflow to improve inference performance at deployment.