Where the Cloud's great advantage comes from

The main reasons customers migrate their systems and services to a public or private cloud are scalability and availability. Scalability reduces the cost of operating and maintaining idle hardware and software resources, while availability promises that the service stays up regardless of hardware and software failures. Availability always comes first, because scalability on an unstable platform is worthless.

To achieve the Goal of Availability

In OpenStack, VM high availability (VMHA) is delivered by the Masakari project. It can integrate with every OpenStack deployment project, from the free openstack-ansible to the commercial Red Hat OpenStack, where the equivalent feature is called Instance HA. If your OpenStack infrastructure is required to meet an SLA, Masakari is the option you should take.

Masakari Components

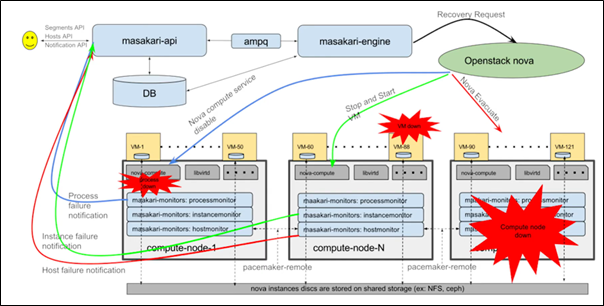

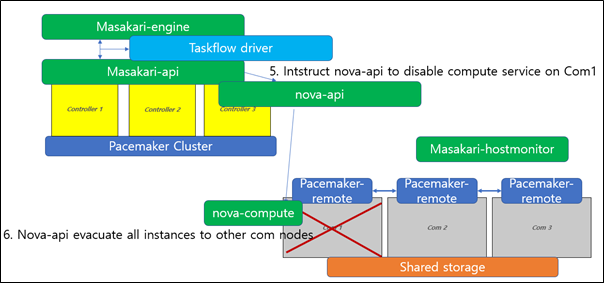

Masakari is composed of an OpenStack-bound part and a Pacemaker-bound part. Refer to the accompanying image for an overview.

OpenStack-bound configuration

This is what the original Masakari project deploys. Up to the openstack-ansible Ussuri release (21.2.5), the Masakari role ships masakari-api, masakari-engine, masakari-monitors, and the DB configuration scripts. With these components alone, Masakari cannot function properly. For example, when a compute node fails, masakari-hostmonitor needs to notify masakari-api of the event so that masakari-api can start the rest of the evacuation procedure. Without Pacemaker involved, however, masakari-hostmonitor never becomes aware of the failing host.
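To make the notification step more concrete, here is a minimal Python sketch of the kind of JSON body a host monitor could send to masakari-api when a compute host stops. The field names follow the Masakari notifications API as I understand it; the helper name `build_host_notification` and the sample hostname are hypothetical, and nothing is actually sent over the network.

```python
import json
from datetime import datetime, timezone


def build_host_notification(hostname, generated_time=None):
    """Build the body of a COMPUTE_HOST failure notification (illustrative only)."""
    if generated_time is None:
        generated_time = datetime.now(timezone.utc)
    return {
        "notification": {
            "type": "COMPUTE_HOST",
            "hostname": hostname,
            "generated_time": generated_time.strftime("%Y-%m-%dT%H:%M:%S.%f"),
            # Assumed payload values for a host that the cluster reports as offline.
            "payload": {
                "event": "STOPPED",
                "host_status": "NORMAL",
                "cluster_status": "OFFLINE",
            },
        }
    }


if __name__ == "__main__":
    # Print the body that would be POSTed to the notifications endpoint.
    print(json.dumps(build_host_notification("compute03"), indent=2))
```

The point of the sketch is only that the monitor has to know which host failed before it can build this message, and that knowledge comes from Pacemaker.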

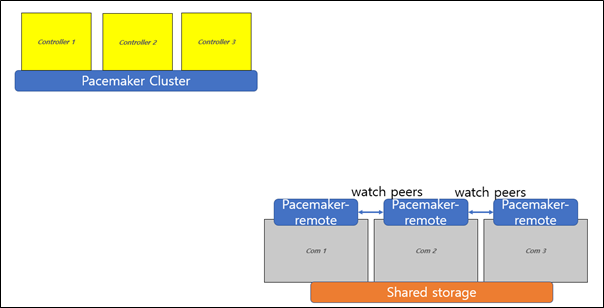

Pacemaker-bound configuration

This is where Pacemaker comes in.

1. The pacemaker-remote process running on the compute nodes notices the failure of a neighboring node.
2. It reports this to the Pacemaker cluster, which is configured on three controller nodes.
3. The pcs cluster marks the node down, then passes the information to masakari-hostmonitor.
4. The hostmonitor can now notify masakari-api that its peer is down. masakari-api takes this job and proceeds to step 5.
5. masakari-api now takes the orchestrator's role. It instructs nova-api to disable the compute service running on the failed node.
6. When done, it has nova-api evacuate the candidate instances to the surviving nodes with the assistance of masakari-engine and its taskflow driver.

These are the basic concepts of the automatic host evacuation that Masakari supports. Now, let's run the drill.
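The last two steps can be sketched as a toy, in-memory Python model: first disable the compute service on the failed host, then move its instances to the surviving hosts. Everything here is hypothetical (the `Cluster` class, `handle_host_failure`, and the round-robin placement); in a real deployment the calls go through nova-api and the Nova scheduler chooses the destination.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model: host name -> list of instance names, plus disabled hosts."""
    hosts: dict
    disabled: set = field(default_factory=set)


def handle_host_failure(cluster, failed_host):
    """Apply the recovery steps to the model and return them in order."""
    # Step 5: disable the compute service on the failed host first.
    actions = [("disable_compute_service", failed_host)]
    cluster.disabled.add(failed_host)

    # Surviving hosts are those not disabled, sorted for a deterministic result.
    targets = sorted(h for h in cluster.hosts if h not in cluster.disabled)
    if not targets:
        raise RuntimeError("no surviving compute host to evacuate to")

    # Step 6: evacuate each instance, spreading them round-robin (a simplification).
    for i, instance in enumerate(list(cluster.hosts[failed_host])):
        target = targets[i % len(targets)]
        cluster.hosts[failed_host].remove(instance)
        cluster.hosts[target].append(instance)
        actions.append(("evacuate", instance, target))
    return actions


if __name__ == "__main__":
    cluster = Cluster(hosts={"compute01": ["vm-a"], "compute03": ["vm-b", "vm-c"]})
    for action in handle_host_failure(cluster, "compute03"):
        print(action)
```

The ordering matters: disabling the service before evacuating keeps the scheduler from placing instances back on the failed host.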

Deploy Masakari with openstack-ansible

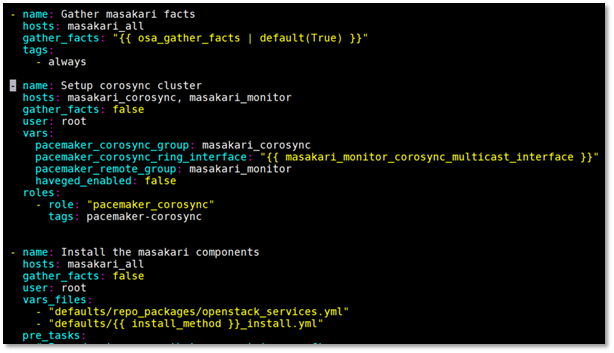

It is up to you whether you configure Pacemaker separately or integrate it with the openstack-ansible Masakari role. I integrated it into os-masakari-install.yml, where you can see a new Ansible task named "Setup Corosync cluster" inserted between the original tasks. The key point is that the Pacemaker installation belongs on the controller nodes and the pacemaker-remote installation on the compute nodes; this is done by placing them in the masakari-corosync group and the masakari-remote group, respectively. If necessary, you can clone the pacemaker_corosync role from this URL: https://github.com/noonedeadpunk/ansible-role-pacemaker_corosync

Test Scenario

Initial status

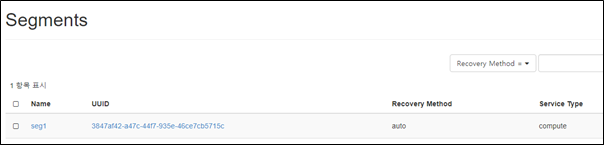

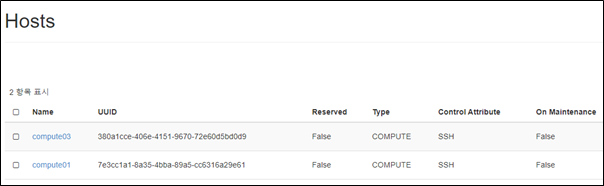

Now that Masakari is ready, move on to the basic test scenario: a compute node is unexpectedly powered off, and the instances on that node should survive. The segment uses the auto recovery method and has two compute node members: compute01 and compute03.
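For reference, a segment like this one can be described as plain request bodies. The sketch below is my reading of the Masakari segments and hosts API, not a copy of my actual setup: the helper names, the segment name `test-segment`, and the `type` and `control_attributes` values are assumptions chosen for illustration.

```python
# Recovery methods that Masakari accepts for a failover segment.
RECOVERY_METHODS = {"auto", "reserved_host", "auto_priority", "rh_priority"}


def segment_request(name, recovery_method="auto"):
    """Build an illustrative request body for creating a failover segment."""
    if recovery_method not in RECOVERY_METHODS:
        raise ValueError(f"unknown recovery method: {recovery_method}")
    return {
        "segment": {
            "name": name,
            "recovery_method": recovery_method,
            "service_type": "COMPUTE",
        }
    }


def host_request(name):
    """Build an illustrative request body for adding a host to a segment."""
    return {
        "host": {
            "name": name,
            "type": "COMPUTE",            # assumed value
            "control_attributes": "SSH",  # assumed value
            "reserved": False,
            "on_maintenance": False,
        }
    }


if __name__ == "__main__":
    print(segment_request("test-segment"))
    for compute in ("compute01", "compute03"):
        print(host_request(compute))
```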

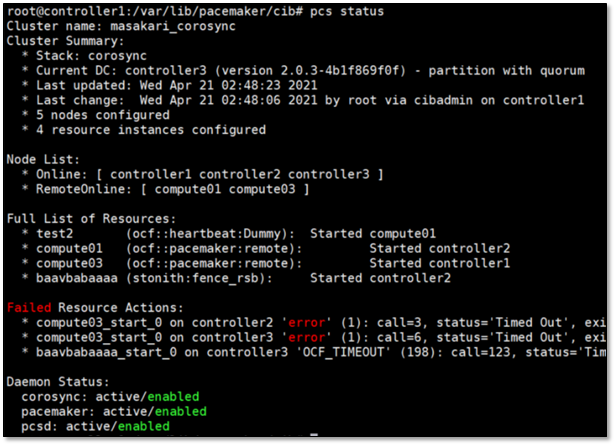

If you have correctly added the compute nodes as pacemaker-remote resources, pcs status will display both compute nodes as 'Started'.
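If you want to check this from a script rather than by eye, a small parser along these lines could work. This is only a sketch: the `SAMPLE` text is illustrative rather than copied from my environment, the function name is hypothetical, and the exact layout of pcs status output differs between Pacemaker versions.

```python
import re

# Matches resource lines such as
#   " compute01 (ocf::pacemaker:remote): Started controller01"
# and the newer bulleted form "  * compute01 (ocf:pacemaker:remote): Started ...".
REMOTE_LINE = re.compile(
    r"^\s*(?:\*\s*)?(?P<name>\S+)\s+\(ocf::?pacemaker:remote\):\s+(?P<state>\w+)"
)


def remote_node_states(pcs_status_text):
    """Map each pacemaker-remote resource name to its reported state."""
    states = {}
    for line in pcs_status_text.splitlines():
        match = REMOTE_LINE.match(line)
        if match:
            states[match.group("name")] = match.group("state")
    return states


# Illustrative text only; real output contains many more lines.
SAMPLE = """\
Full list of resources:

 compute01 (ocf::pacemaker:remote): Started controller01
 compute03 (ocf::pacemaker:remote): Stopped
"""

if __name__ == "__main__":
    for node, state in remote_node_states(SAMPLE).items():
        print(node, state)
```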

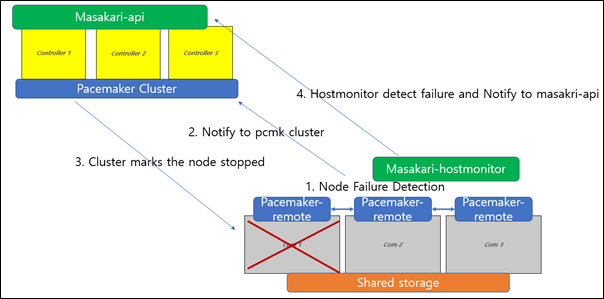

Crash the compute node

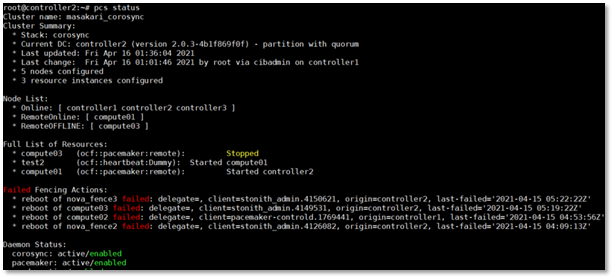

To simulate a compute node power failure, I powered off compute03. Soon, the compute03 resource is marked 'Stopped', since the pacemaker-remoted service on compute01 has reported its peer's status change to the Pacemaker cluster.

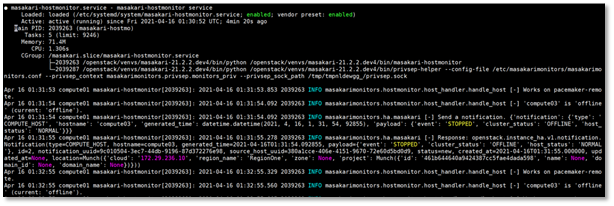

Then masakari-hostmonitor running on compute01 realizes that its peer has failed, because it runs on the pacemaker-remote node. You will see it send a notification to masakari-api.

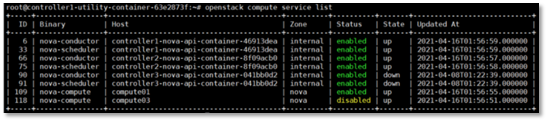

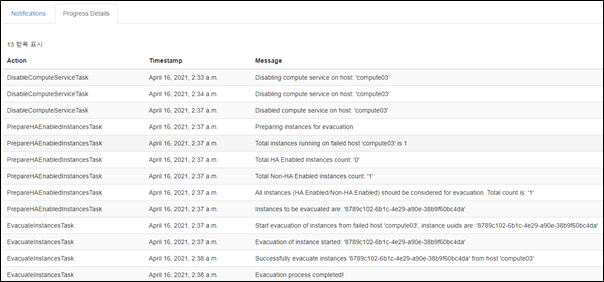

Following the appropriate steps, nova-api disables the compute service and, in time, also recognizes that the service is down.

Now that the evacuation condition is met, nova-api evacuates all eligible instances to the surviving nodes on instruction from masakari-api, processed by masakari-engine's taskflow driver.

Conclusion

I've illustrated a very simple scenario. More complicated settings may be required depending on the customer's requirements. STONITH fencing is required when shared storage is used for instance volumes, to avoid split-brain. Also try applying masakari-processmonitor and masakari-instancemonitor. These will enhance the availability of your OpenStack infrastructure.