Triton

NVIDIA Triton Inference Server

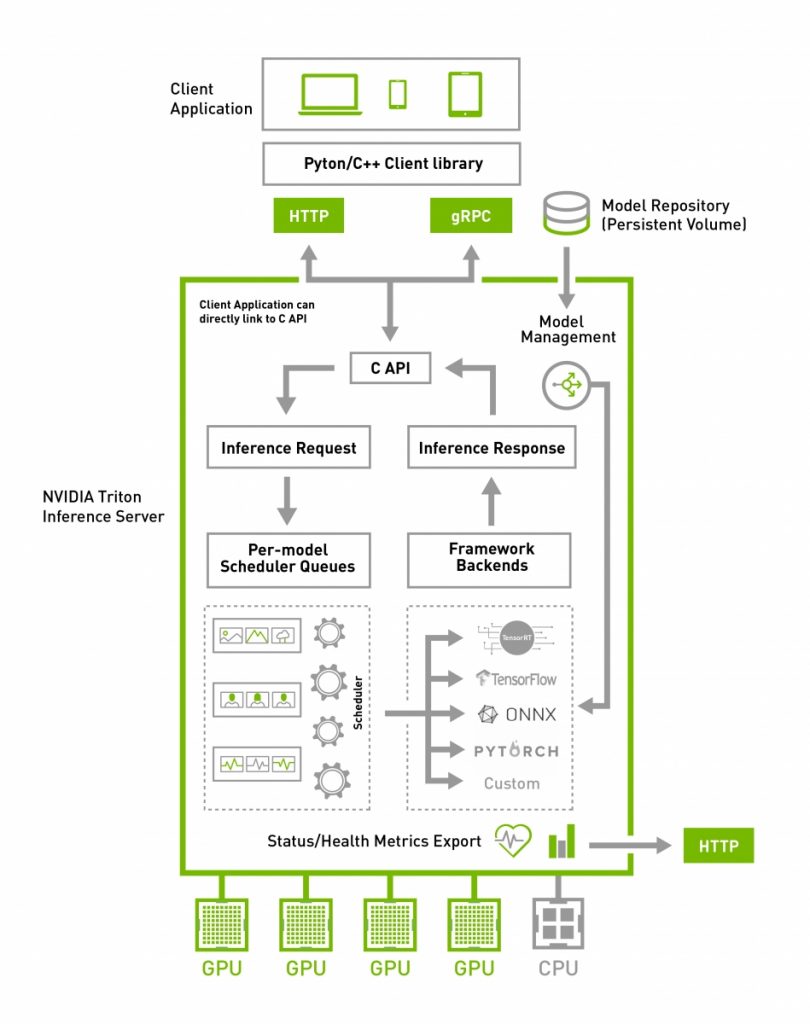

NVIDIA® Triton Inference Server (formerly NVIDIA TensorRT Inference Server) simplifies the deployment of AI models at scale in production. It is an open source inference serving software that lets teams deploy trained AI models from any framework (TensorFlow, TensorRT, PyTorch, ONNX Runtime, or a custom framework), from local storage or Google Cloud Platform or AWS S3 on any GPU- or CPU-based infrastructure (cloud, data center, or edge).

– nvidia.com
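The description above mentions serving models from several frameworks out of one storage location. In Triton that location is usually organized as a model repository: one directory per model, holding a `config.pbtxt` file and numbered version subdirectories. The following is a minimal sketch of that layout, not an official tool. The helper `scaffold_model`, the model names, and the table of default file names are assumptions made for illustration and should be checked against the documentation for your Triton version.

```python
import tempfile
from pathlib import Path

# Default model file names per platform, as recalled from the Triton
# documentation; treat them as assumptions and check your Triton version.
DEFAULT_MODEL_FILES = {
    "tensorrt_plan": "model.plan",
    "onnxruntime_onnx": "model.onnx",
    "pytorch_libtorch": "model.pt",
    "tensorflow_graphdef": "model.graphdef",
}


def scaffold_model(repo: Path, name: str, platform: str, version: int = 1) -> Path:
    """Create <repo>/<name>/config.pbtxt and <repo>/<name>/<version>/.

    Returns the path where the trained model file is expected to be copied.
    """
    model_dir = repo / name
    version_dir = model_dir / str(version)
    version_dir.mkdir(parents=True, exist_ok=True)

    # Minimal config: real models also declare input and output tensors.
    config = f'name: "{name}"\nplatform: "{platform}"\n'
    (model_dir / "config.pbtxt").write_text(config)
    return version_dir / DEFAULT_MODEL_FILES[platform]


# Hypothetical model names, used only to show the resulting layout.
repo = Path(tempfile.mkdtemp(prefix="model_repository_"))
targets = [
    scaffold_model(repo, "classifier_onnx", "onnxruntime_onnx"),
    scaffold_model(repo, "detector_trt", "tensorrt_plan"),
]
for target in targets:
    print(target.relative_to(repo))
```

A directory like this is what the `tritonserver` binary is pointed at through its `--model-repository` option. Repositories in cloud object storage are expected to follow the same layout.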

Triton Features

Support for Multiple Frameworks

Triton Inference Server supports all major frameworks, including TensorFlow, TensorRT, PyTorch, and ONNX Runtime, as well as custom framework backends. This gives AI researchers and data scientists the freedom to choose the right framework for each project.

High Performance Inference

Triton runs models concurrently on GPUs to maximize utilization, supports CPU-based inference, and offers advanced features such as model ensembles and streaming inference. This helps developers bring models to production rapidly.

Designed for IT and DevOps

Triton is available as a Docker container, integrates with Kubernetes for orchestration and scaling, is part of Kubeflow, and exports Prometheus metrics for monitoring. This helps IT and DevOps teams streamline model deployment in production.
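To make the monitoring point concrete, here is a minimal sketch of reading metrics in the Prometheus text format and computing a per-model success ratio. The sample text is made up for illustration. The metric names `nv_inference_request_success` and `nv_inference_request_failure` are the ones Triton is commonly documented to export, but verify them against your server's output. The parser is deliberately simplified: it assumes label values contain no `}` or escaped quotes.

```python
import re
from typing import Dict, List, Optional, Tuple

Sample = Tuple[str, Dict[str, str], float]

# Made-up sample in the Prometheus text exposition format.
SAMPLE_METRICS = """\
# HELP nv_inference_request_success Number of successful inference requests
# TYPE nv_inference_request_success counter
nv_inference_request_success{model="resnet50",version="1"} 42
nv_inference_request_failure{model="resnet50",version="1"} 3
nv_inference_request_success{model="bert",version="2"} 10
"""

# name, optional {labels}, value, optional integer timestamp
LINE_RE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)(?:\s+-?\d+)?$'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text: str) -> List[Sample]:
    """Return (name, labels, value) for every sample line; skip comments."""
    samples: List[Sample] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue  # ignore lines this simplified parser cannot handle
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def success_ratio(samples: List[Sample], model: str) -> Optional[float]:
    """Successful requests divided by all requests for one model."""
    def total(metric: str) -> float:
        return sum(v for n, l, v in samples if n == metric and l.get("model") == model)

    ok = total("nv_inference_request_success")
    failed = total("nv_inference_request_failure")
    return ok / (ok + failed) if (ok + failed) else None


samples = parse_metrics(SAMPLE_METRICS)
print(success_ratio(samples, "resnet50"))
```

In a real deployment the text would come from the server's metrics endpoint (by default reported as port 8002, path `/metrics`) and would normally be scraped by Prometheus itself rather than parsed by hand. The sketch only shows what the exported data looks like.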